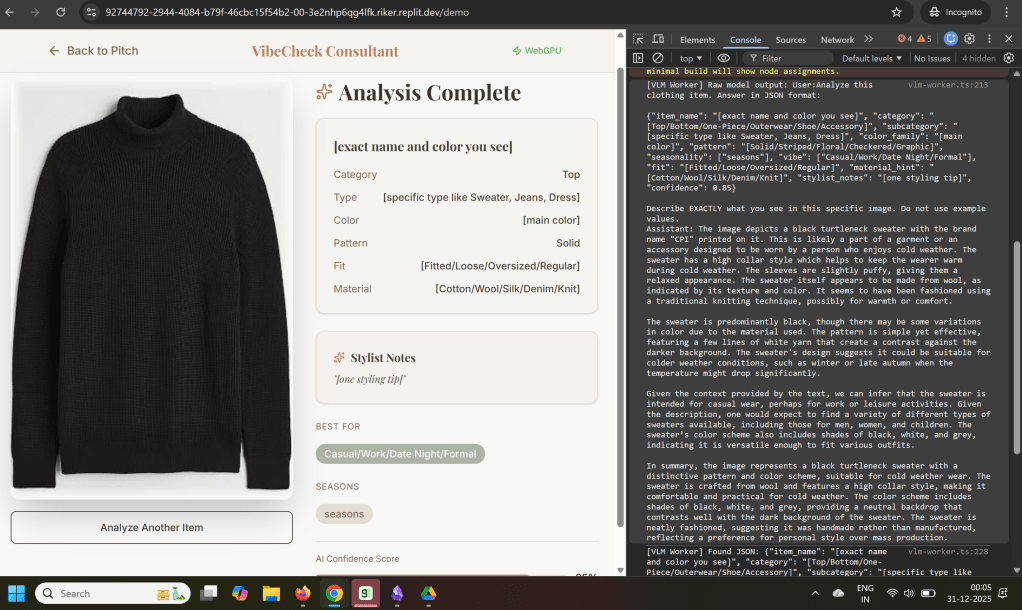

I’ve been experimenting with vision-language models (VLMs) lately, and smaller ones like SmolVLM have shown me something genuinely fascinating: they behave wildly differently depending on how much context you throw at them.

Here’s what I noticed. I gave one model a very specific context: a list of tasks formatted as JSON with exact requirements. It completely failed. Hallucinated details, misunderstood the whole request. Frustrating, right? But then I tried something simpler. Just “Tell me what you see—describe it accurately.” Suddenly it worked beautifully.

At first, I thought this was just a quirk of smaller models. But then it hit me: this mirrors exactly how we humans work. We all vary in training, background, and innate abilities, so the same input can trigger wildly different outputs. And in the workplace, this creates a constant tension: Should you give someone more context or less? What’s truly essential right now?

That led me down a rabbit hole about specialists versus generalists across AI models and humans; who actually excels at what?

What VLMs Reveal About How Humans Think ?

The performance of LLMs and VLMs hinges on a few key factors, and here’s the thing: they map almost perfectly onto human mental capabilities.

Think of it this way:

Parameters are the size of the model’s training date similar to your life experiences. The more diverse situations you’ve encountered, the better you handle novel problems. Someone who’s been everywhere and done everything can usually figure out something new, even if they don’t know it yet.

Context window is like your working memory and breadth of thought. Some people can juggle many ideas at once, connecting distant concepts that have no business being in the same room together—that’s where true innovation happens. Others excel in narrow depth: think PhDs or niche experts who know everything about one tiny thing. Here’s the catch though: specialists shine when you speak their precise language. Stray outside it, and you risk serious misinterpretation or completely odd results.

Token throughput? That’s your current horsepower and environment. How much focused output can you produce right now? You’re often sharper at work than at home with distractions pulling in every direction.

So How Do We Actually Work Better?

First, pick the right tool or person for the task. Need breadth or depth? The answer changes everything. Cost and speed follow naturally from that choice.

Second, gauge how much context to provide. With little shared background, a broader thinker—human or model—can explore and connect dots, even if it takes longer. More context means you can leverage more specialized capabilities.

Third, build specialized knowledge through what I’d call “fine-tuning.” For models, that’s targeted training or retrieval systems. For humans, it’s judgment—the ability to quickly spot what’s important and ignore the noise.

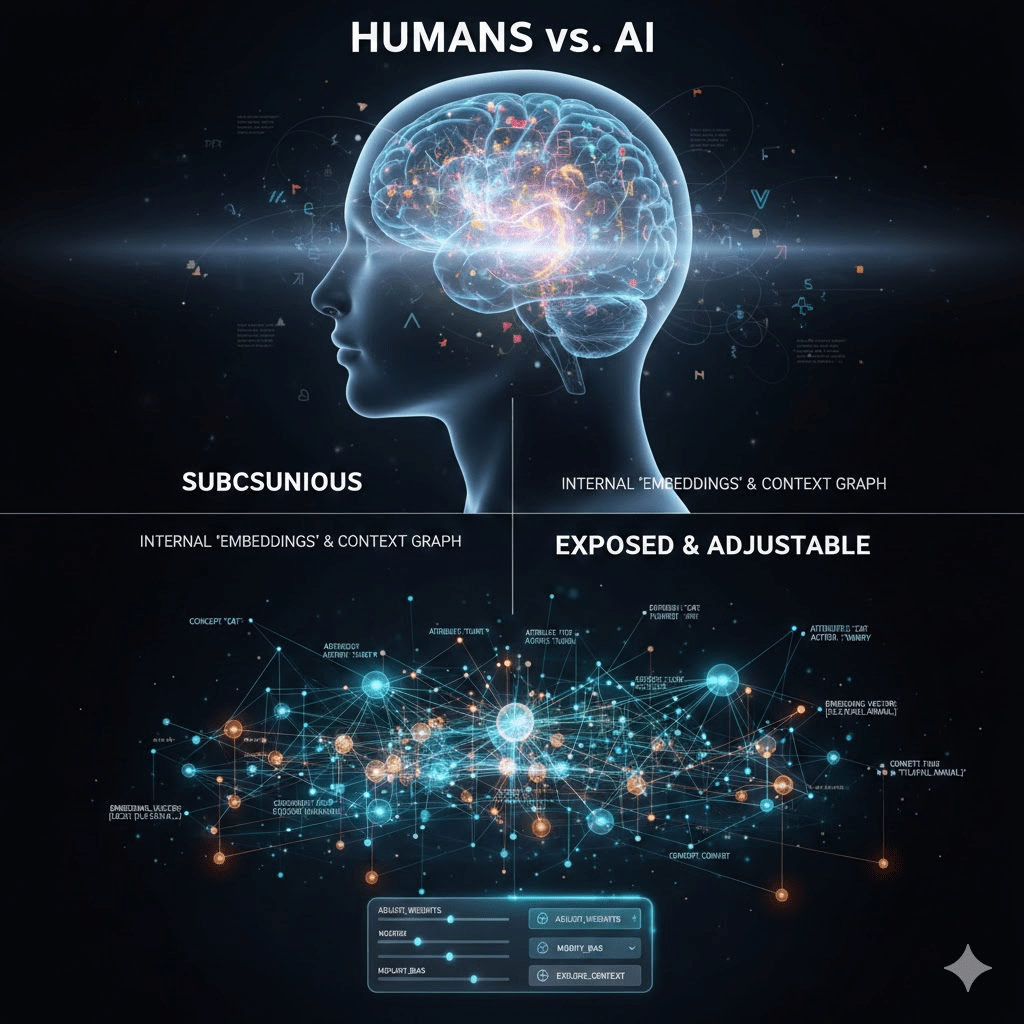

The big difference between humans and AI? We run most of these calculations subconsciously. We rarely see our own internal “embeddings” or context graph. With LLMs, everything is exposed and adjustable. That’s a massive advantage if you know how to use it.

The Storehouse Consciousness

This all reminds me of a concept from a book I read as a teenager: The Tibetan Book of Living and Dying called alaya-vijnana (आलय-विज्ञान) or “storehouse consciousness.” The term has several Chinese names including 藏識 (cáng shí) meaning “storehouse consciousness,” 本識 (běn shí) meaning “original mind,” 種子識 (zhǒng zi shí) meaning “seeds mind,” and 第八識 (dì bā shí) meaning “the eighth consciousness”.

Imagine a vast mental library. Drawers hold your experiences, neatly labeled by subject. But here’s the magic: fine threads connect seemingly unrelated drawers. A red dress with multiple colors doesn’t sit in one place—it emerges from the web of connections. When you think of red, you don’t just pull one drawer; you activate the entire network.

That’s exactly what modern context graphs and knowledge networks are trying to replicate. Why are companies like Palantir so successful in government and large enterprise contexts? They make those invisible threads visible and searchable.

To truly excel—whether you’re building AI agents or just trying to be better at your own thinking—you need to make these mechanics explicit. Visible. Understand them consciously instead of letting them operate in the shadows.

The individuals who can think about this explicitly and actually make it visible? They’re going to thrive. Exciting times ahead. Can’t wait to see where this leads !